groupBy() vs groupByKey()

In this post, I would like to cover two grouping operations on RDDs. Which one should I use: groupBy() or groupByKey()? This is a common question for newcomers when they come across a scenario where data has to be grouped by some key.

These operations are best suited to data with a key-value structure. Avoid them when your intention goes beyond just grouping: for grouping plus aggregation, consider the much more efficient reduceByKey() or combineByKey() instead. Before we get into the differences, let's first take a quick look at each operation.
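To illustrate the aggregation case, here is a minimal sketch, assuming a local Spark setup (the app name and the `local[2]` master are illustrative). Both pipelines compute per-key sums, but `reduceByKey` combines values inside each partition before the shuffle, while `groupByKey` ships every single value across the network and only then sums.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Illustrative local configuration; in spark-shell, getOrCreate returns the existing `sc`.
val sc = SparkContext.getOrCreate(
  new SparkConf().setAppName("group-vs-reduce").setMaster("local[2]"))

val pairs = sc.parallelize(Seq((1, 2), (1, 3), (2, 1), (2, 2), (2, 3)))

// Grouping first: every value is shuffled, then summed per key.
val sumsViaGroup: Map[Int, Int] =
  pairs.groupByKey().mapValues(_.sum).collect().toMap

// reduceByKey: partial sums are computed per partition before the shuffle.
val sumsViaReduce: Map[Int, Int] =
  pairs.reduceByKey(_ + _).collect().toMap
```

Both maps contain the same sums; the difference is how much data crosses the shuffle.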

groupBy()

groupBy() groups together the elements that share the same key. It is a transformation on an RDD, which means it is lazily evaluated. It is also a wide operation that shuffles data, which makes it a costly one. groupBy() works on both pair and unpaired RDDs, but it is mostly used on unpaired ones, because it lets the programmer explicitly specify the key to group by.

```scala
val baseRdd = sc.parallelize(Seq((1, 2), (1, 3), (2, 1), (2, 2), (2, 3)))
baseRdd.groupBy(_._1)
```
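Where groupBy() shines is an unpaired RDD, since the key is computed by a function you supply. A short sketch, assuming a local Spark setup; the word list and the first-character key are purely illustrative:

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Illustrative local configuration; in spark-shell, getOrCreate returns the existing `sc`.
val sc = SparkContext.getOrCreate(
  new SparkConf().setAppName("groupBy-unpaired").setMaster("local[2]"))

// An unpaired RDD: plain strings with no key defined yet.
val words = sc.parallelize(Seq("apple", "avocado", "banana", "blueberry", "cherry"))

// groupBy takes a function that computes each element's key (here its first
// character), giving an RDD[(Char, Iterable[String])]. Groups are turned into
// Sets so the result does not depend on the order within a group.
val byInitial: Map[Char, Set[String]] =
  words.groupBy(w => w.head).mapValues(_.toSet).collect().toMap
```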

groupByKey()

groupByKey() also groups together the data that shares the same key, but it is meant only for pair RDDs. This means the programmer cannot explicitly specify the key field to group by, as with groupBy(); the operation is specialised for RDDs that already define their key. Like groupBy(), it is a transformation and a wide, costly operation. An important point to note is that, by default, groupByKey() returns a hash-partitioned RDD. We will come back to this aspect shortly.

```scala
val baseRdd = sc.parallelize(Seq((1, 2), (1, 3), (2, 1), (2, 2), (2, 3)))
baseRdd.groupByKey()
```
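You can check the partitioning yourself by inspecting `partitioner` before and after the grouping. A minimal sketch, assuming a local Spark setup (names are illustrative). A hash partitioner sends each key to the partition given by its `hashCode` modulo the number of partitions, so all values of one key end up in the same partition.

```scala
import org.apache.spark.{HashPartitioner, SparkConf, SparkContext}

// Illustrative local configuration; in spark-shell, getOrCreate returns the existing `sc`.
val sc = SparkContext.getOrCreate(
  new SparkConf().setAppName("groupByKey-partitioner").setMaster("local[2]"))

val baseRdd = sc.parallelize(Seq((1, 2), (1, 3), (2, 1), (2, 2), (2, 3)))

// A freshly parallelized RDD carries no partitioner.
val before = baseRdd.partitioner

// groupByKey() without arguments uses Spark's default partitioner, which is a
// HashPartitioner when the input has no partitioner of its own.
val after = baseRdd.groupByKey().partitioner
val isHash = after.exists(_.isInstanceOf[HashPartitioner])
```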

Differences

I can hear what you are thinking: “Then what's the difference between them, apart from the syntax?” Showing you exactly that is the intention of this article, so from here on let's focus on the differences. Let me explain with an example.

```scala
val data = for {
  x <- 1 to 200000
  y <- 1 to 20
} yield (x, y)
val baseRDD = sc.parallelize(data)
baseRDD.groupBy(_._1).take(1)
```

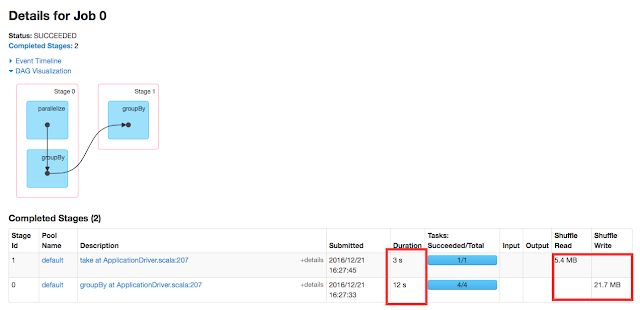

When you execute the above job on a two-core node, the Spark UI shows the performance metrics above.

Now let's modify the job to use groupByKey() and check the results.

```scala
val data = for {
  x <- 1 to 200000
  y <- 1 to 20
} yield (x, y)
val baseRDD = sc.parallelize(data)
baseRDD.groupByKey().take(1)
```
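One way to see where the extra shuffle data comes from: in Spark's source, `RDD.groupBy(f)` is implemented by keying each element with `f` and then calling `groupByKey`, so the grouped values are the whole original elements. The sketch below uses a scaled-down version of the job's data and assumes a local Spark setup. It checks that equivalence and shows the resulting types: after `groupBy(_._1)` each grouped value is a full `(Int, Int)` tuple, so the key travels through the shuffle twice, whereas `groupByKey()` shuffles only the value.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

// Illustrative local configuration; in spark-shell, getOrCreate returns the existing `sc`.
val sc = SparkContext.getOrCreate(
  new SparkConf().setAppName("groupBy-vs-groupByKey").setMaster("local[2]"))

// A smaller version of the job's data: 3 keys with 20 values each.
val data = for {
  x <- 1 to 3
  y <- 1 to 20
} yield (x, y)
val baseRDD = sc.parallelize(data)

// groupBy keeps the whole (key, value) tuple as the grouped element...
val viaGroupBy: RDD[(Int, Iterable[(Int, Int)])] = baseRDD.groupBy(_._1)

// ...which matches keying each tuple by its first field and grouping.
val viaMapThenGroup: RDD[(Int, Iterable[(Int, Int)])] =
  baseRDD.map(t => (t._1, t)).groupByKey()

// groupByKey() on the pair RDD groups only the values.
val viaGroupByKey: RDD[(Int, Iterable[Int])] = baseRDD.groupByKey()

// Sort each group so the comparisons do not depend on shuffle order.
val a = viaGroupBy.mapValues(_.toSeq.sorted).collect().toMap
val b = viaMapThenGroup.mapValues(_.toSeq.sorted).collect().toMap
val c = viaGroupByKey.mapValues(_.toSeq.sorted).collect().toMap
```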

Conclusion

Got it? groupByKey() runs roughly 4 seconds faster than groupBy() here. Also look at the difference in shuffle read and write sizes. So for a pair RDD, groupByKey() is clearly more efficient than groupBy(). The gain comes mainly from shuffling less data: both operations hash-partition by default, but groupBy(_._1) carries the whole (key, value) tuple as the grouped value, so every key is shuffled twice, while groupByKey() shuffles only the value. So when your scenario is just grouping a pair RDD, go ahead with groupByKey().
