zip()

zip(...) is a simple and interesting operation which can be considered as general purpose operation. Basically it returns key-value pairs (ZippedPartitionsRDD2) with the first element in each RDD, second element in each RDD, etc. It is an element-wise, narrow transformation operation. Following example demonstrates the above definitions.

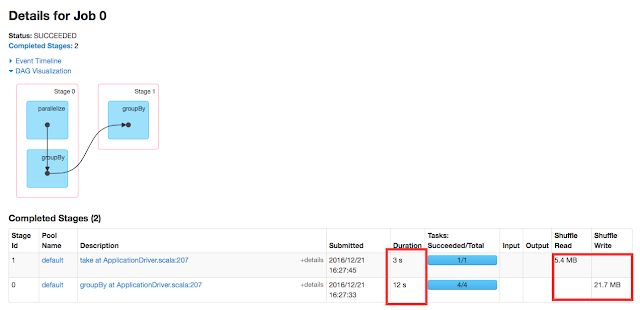

The above result shows that, first element of rdd1 is zipped with first element of rdd2 and so on.Also the DAG confirms that zip(...) is a narrow operation. If you look at the code, you could find both rdd1 and rdd2 has same number of record counts but interesting point here is, following error will be thrown if your rdds has different record counts.

val rdd1 = spark.sparkContext.parallelize(Seq(1, 2, 3, 4, 5)) val rdd2 = spark.sparkContext.parallelize(Seq(6, 7, 8, 9, 10)) rdd1.zip(rdd2).foreach(println(_))Result

(1,6) (2,7) (4,9) (5,10) (3,8)DAG

The above result shows that, first element of rdd1 is zipped with first element of rdd2 and so on.Also the DAG confirms that zip(...) is a narrow operation. If you look at the code, you could find both rdd1 and rdd2 has same number of record counts but interesting point here is, following error will be thrown if your rdds has different record counts.

Caused by: org.apache.spark.SparkException: Can only zip RDDs with same number of elements in each partitionNow let's explore syntactical aspect of the operation.

public <U> RDD<scala.Tuple2<T,U>> zip(RDD<U> other, scala.reflect.ClassTag<U> evidence$10)The function takes, another rdd as an input and returns RDD of Tuple [ZippedPartitionsRDD2]. The next question would come to your mind is about partitions. Resultant rdd of zip(...) operation will not retain any Partitioner even if the parent rdds did.

Comments

Post a Comment