Reduce() Operation

In this blog post, let's discuss the reduce() operation in Spark. First, a brief background on reduce() before we jump into the Spark flavour of the operation. In general, reduce() is an aggregate, or combiner, operation: it uses the input function to combine a collection of data and returns a single value as the result. The input function takes two parameters:

- Accumulator: in each iteration, the function combines/aggregates the two parameters and keeps the result in the accumulator parameter.
- Current: this argument represents the current value of the iteration.

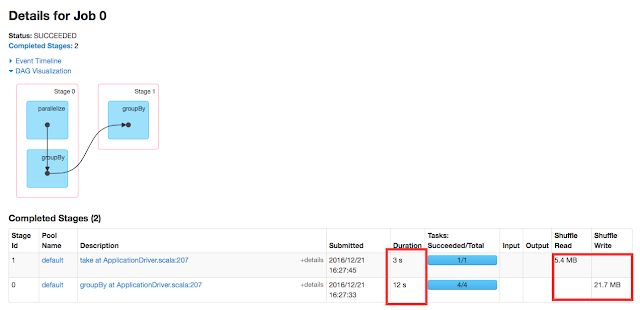
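To make the accumulator/current roles concrete, here is a minimal sketch that uses a plain Scala `List` rather than Spark, so it can be pasted into any Scala REPL. The `numbers` and `total` names are only illustrative, and `reduceLeft` is chosen here because it fixes a left-to-right order, which makes the trace easy to follow.

```scala
// Plain Scala collections, no Spark needed for this illustration.
val numbers = List(1, 2, 3, 4)

// reduceLeft walks the list left to right; the first element seeds the accumulator.
val total = numbers.reduceLeft { (accum, current) =>
  val next = accum + current
  println(s"accum=$accum current=$current -> $next")
  next
}
// prints:
// accum=1 current=2 -> 3
// accum=3 current=3 -> 6
// accum=6 current=4 -> 10

println(total) // 10
```

Each step feeds the previous result back in as the accumulator, and the last accumulated value is what comes out.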

With that understanding, let's start exploring reduce() in Spark. It is an action, typically executed on the result of one or more transformations such as map(). Unlike wide transformations such as reduceByKey(), reduce() does not shuffle data across the partitions in the cluster: each partition is reduced locally, and the partial results are then combined on the driver.

reduce() takes a function that must be associative and commutative, so that the result does not depend on how the data is partitioned or in what order the partial results are combined. Let's see it with a simple example.
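Why the function needs these properties can be sketched without a cluster. The snippet below is only an approximation: `partitionedReduce` is a hypothetical helper, not a Spark API, and it assumes the data is split into fixed-size chunks that are reduced separately before the partial results are combined. Addition gives the same answer for any chunk size, while subtraction (neither associative nor commutative) does not.

```scala
// Hypothetical helper: mimics a partitioned reduce with plain Scala collections.
def partitionedReduce(data: Seq[Int], partitionSize: Int)(f: (Int, Int) => Int): Int =
  data.grouped(partitionSize) // split the data into "partitions"
    .map(_.reduce(f))         // reduce each partition on its own
    .reduce(f)                // combine the partial results

val data = 1 to 8

// Addition: the partitioning does not matter.
val sum2 = partitionedReduce(data, 2)(_ + _) // 36
val sum4 = partitionedReduce(data, 4)(_ + _) // 36

// Subtraction: the result changes with the partitioning.
val diff2 = partitionedReduce(data, 2)(_ - _) // 2
val diff4 = partitionedReduce(data, 4)(_ - _) // 8
```

On a real cluster the partition boundaries and the order in which partial results arrive are not under your control, so a function like subtraction can produce different results from run to run.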

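    // Adds the current value to the accumulated value
    val addOperation = (accum: Int, current: Int) => accum + current
    // sc is the SparkContext provided by spark-shell
    val baseRDD = sc.parallelize(1 to 100)
    val result = baseRDD.reduce(addOperation)
    println(result)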

Result

5050

In the example, baseRDD holds the values from 1 to 100, and the reduce operation answers the question 1 + 2 + 3 + … + 100 = ?. It is important to remember that the first argument of the input function is the accumulated value, and its final value is what gets returned as the result.
